#操作系统:win7 64位

#Python版本:3.5.2

#Python 3.5.2 (v3.5.2:4def2a2901a5, Jun 25 2016, 22:18:55) [MSC v.1900 64 bit (AM

#D64)] on win32

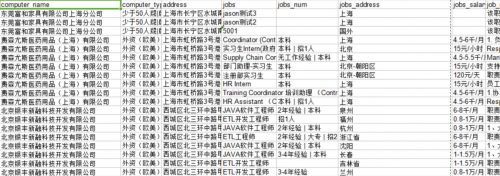

#效果图:

#功能:爬取列表页面,和相对应职位的详细页面

# coding=utf-8

import xlwt

import requests

from lxml import etree

import time

all_info_list = []

def get_info(url):

job_infos = []

job_msg_1 = ''

work_address = ''

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/53.0.2785.143 Safari/537.36'

}

html = requests.get(url,headers=headers)

selector = etree.HTML(html.text)

error = selector.xpath('/html/body/div[2]/div[2]/div[1]/div/text()')

if len(error):

pass

else:

computer_name = selector.xpath('/html/body/div[2]/div[2]/div[2]/div/h1/text()')

if len(computer_name):

computer_name = computer_name[0].encode('latin1').decode('gb2312')

else:

computer_name = "null"

computer_type = selector.xpath('/html/body/div[2]/div[2]/div[2]/div/p[1]/text()')

if len(computer_type):

computer_type = computer_type[0].encode('latin1').decode('GBK')

computer_type=computer_type.replace(' ', '')

computer_type = computer_type.replace('\t', '')

else:

computer_type = "null"

address = selector.xpath('/html/body/div[2]/div[2]/div[3]/div[2]/div/p/text()')

if len(address):

try:

address = address[1].encode('latin1').decode('gb2312')

address = address.replace(' ', '')

address = address.replace('\t', '')

except UnicodeDecodeError:

pass

else:

address = "null"

infos = selector.xpath('//*[@id = "joblistdata"]/div[*]')

if len(infos) != 0:

for info in infos:

jobs = info.xpath('p/a/text()')

if len(jobs) != 0:

jobs = jobs[0].encode('latin1').decode('gb2312')

else:

pass

jobs_num = info.xpath('span[1]/text()')

if len(jobs_num) > 0:

jobs_num = jobs_num[0].encode('latin1').decode('gb2312')

else:

pass

jobs_address = info.xpath('span[2]/text()')

if len(jobs_address) > 0:

jobs_address = jobs_address[0].encode('latin1').decode('gb2312')

else:

pass

jobs_salary = info.xpath('span[3]/text()')

if len(jobs_salary) > 0:

jobs_salary = jobs_salary[0].encode('latin1').decode('gb2312')

else:

pass

jobs_href = info.xpath('p/a/@href')

if len(jobs_href):

jobs_href = jobs_href[0].encode('latin1').decode('gb2312')

html_1 = requests.get(jobs_href)

selector_1 = etree.HTML(html_1.text)

job_msg = selector_1.xpath('/html/body/div[3]/div[2]/div[3]/div[2]/div/p/text()')

if len(job_msg) !=0:

job_msg_1 = ''

for job_msg_num in range(len(job_msg)):

try:

job_msg_2 = job_msg[job_msg_num].encode('latin1').decode('gb2312')

job_msg_1 += job_msg_2

except UnicodeDecodeError:

pass

else:

job_msg_br = selector_1.xpath('//*[@class="bmsg job_msg inbox"]//text()')

job_msg_1 = ''

for job_msg_num in range(len(job_msg_br)):

try:

job_msg_2 = job_msg_br[job_msg_num].encode('latin1').decode('gb2312')

job_msg_1 += job_msg_2

except UnicodeDecodeError:

pass

work_address= selector_1.xpath('/html/body/div[3]/div[2]/div[3]/div[3]/div/p/text()')

if len(work_address):

work_address = work_address[1].encode('latin1').decode('gb2312')

else:

work_address = "null"

else:

jobs_href = "null"

data = {

'jobs':jobs,

'jobs_num':jobs_num,

'jobs_address':jobs_address,

'jobs_salary':jobs_salary,

'job_msg_1':job_msg_1,

'work_address':work_address,

}

job_infos.append(data)

for job_info in range(len(job_infos) - 1):

info_list = [computer_name, computer_type, address, job_infos[job_info]['jobs'],

job_infos[job_info]['jobs_num'], job_infos[job_info]['jobs_address'],

job_infos[job_info]['jobs_salary'], job_infos[job_info]['job_msg_1'],

job_infos[job_info]['work_address']]

all_info_list.append(info_list)

print (all_info_list)

else:

pass

time.sleep(1)

if __name__ == '__main__':

urls = ['http://jobs.51job.com/all/co{}.html'.format(number) for number in range(101, 501)]

for url in urls:

get_info(url)

header = ['computer_name','computer_type','address','jobs','jobs_num','jobs_address','jobs_salary','job_msg_1','work_address']

book = xlwt.Workbook(encoding='utf-8')

sheet = book.add_sheet('Sheet1')

for h in range(len(header)):

sheet.write(0, h, header[h])

i = 1

for list in all_info_list:

j = 0

for data in list:

sheet.write(i, j, data)

j += 1

i += 1

book.save('51job_test_101-500.xls')

通过Python爬虫,扒一扒51job网站上的公司以及职位信息

通过Python爬虫,扒一扒51job网站上的公司以及职位信息