本操作文档基于Docker来实现:

系统环境:

[root@node100 ~]# rpm -qa | grep docker

docker-ce-cli-20.10.9-3.el7.x86_64

docker-ce-rootless-extras-20.10.9-3.el7.x86_64

docker-scan-plugin-0.8.0-3.el7.x86_64

docker-ce-20.10.9-3.el7.x86_64

[root@node100 ~]# uname -a

Linux node100 4.4.219-1.el7.elrepo.x86_64 #1 SMP Sun Apr 12 16:13:06 EDT 2020 x86_64 x86_64 x86_64 GNU/Linux

[root@node100 ~]# cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

docker软件安装:

设置yum仓库

安装必要依赖包

$ sudo yum install -y yum-utils device-mapper-persistent-data lvm2

添加阿里镜像稳定版仓库

$ sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

安装Docker-CE

1 安装最新版

sudo yum install -y docker-ce

启动Docker服务

sudo systemctl start docker

sudo systemctl enable docker

为docker设置国内镜像

[root@localhost ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"]

}

[root@localhost ~]# systemctl restart docker.service

下载docker相关的镜像:

docker pull mysql:5.7

docker pull consul:latest

docker pull actiontech/dtle:latest

下载后的镜像清单:

[root@node100 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysql 5.7 8a8a506ccfdc 16 hours ago 448MB

consul latest adb24f6d4612 13 days ago 116MB

actiontech/dtle latest c4d3c49ed3ad 3 months ago 834MB

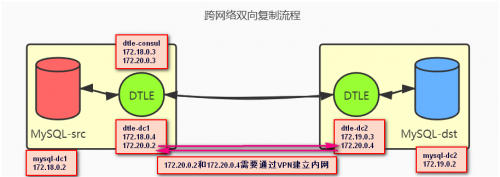

以下步骤以docker容器的方式快速演示如何搭建MySQL的跨数据中心的双向复制.

创建两个网络

docker network create dtle-net-dc1

docker network create dtle-net-dc2

在两个网络中分别创建MySQL数据库实例:

docker run --name mysql-dc1 -e MYSQL_ROOT_PASSWORD=pass -p 33061:3306 --network=dtle-net-dc1 -d mysql:5.7 --gtid-mode=ON --enforce-gtid-consistency=1 --log-bin=bin --server-id=1

docker run --name mysql-dc2 -e MYSQL_ROOT_PASSWORD=pass -p 33062:3306 --network=dtle-net-dc2 -d mysql:5.7 --gtid-mode=ON --enforce-gtid-consistency=1 --log-bin=bin --server-id=2

检查MySQL是否启动成功:

[root@node100 ~]# mysql -h 127.0.0.1 -P 33061 -uroot -ppass -e "select @@version\G"

*************************** 1. row ***************************

@@version: 5.7.35-log

[root@node100 ~]# mysql -h 127.0.0.1 -P 33062 -uroot -ppass -e "select @@version\G"

*************************** 1. row ***************************

@@version: 5.7.35-log

在两个网络中分别创建DTLE:

docker run --name dtle-consul -p 8500:8500 --network=dtle-net-dc1 -d consul:latest

docker run --name dtle-dc1 -p 4646:4646 --network=dtle-net-dc1 -d actiontech/dtle

# dtle-dc2 will work as a client only. No need to start consul-dc2.

docker run --name dtle-dc2 -p 5646:4646 --network=dtle-net-dc2 -d actiontech/dtle

将两个DTLE通过公网连通:

docker network create dtle-net-public

docker network connect dtle-net-public dtle-dc1

docker network connect dtle-net-public dtle-consul

docker network connect dtle-net-public dtle-dc2

现在容器中的主机网络如下:

[root@node100 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

7ca4f490d4c4 actiontech/dtle "/dtle/usr/bin/nomad…" 2 hours ago Up About an hour 8190/tcp, 0.0.0.0:5646->4646/tcp, :::5646->4646/tcp dtle-dc2

46d4d92a3911 actiontech/dtle "/dtle/usr/bin/nomad…" 2 hours ago Up About an hour 0.0.0.0:4646->4646/tcp, :::4646->4646/tcp, 8190/tcp dtle-dc1

9f5d1987a4bf consul:latest "docker-entrypoint.s…" 2 hours ago Up 2 hours 8300-8302/tcp, 8301-8302/udp, 8600/tcp, 8600/udp, 0.0.0.0:8500->8500/tcp, :::8500->8500/tcp dtle-consul

3c0503535c8a mysql:5.7 "docker-entrypoint.s…" 2 hours ago Up 2 hours 33060/tcp, 0.0.0.0:33062->3306/tcp, :::33062->3306/tcp mysql-dc2

cbb729c71787 mysql:5.7 "docker-entrypoint.s…" 2 hours ago Up 2 hours 33060/tcp, 0.0.0.0:33061->3306/tcp, :::33061->3306/tcp mysql-dc1

mysql-dc1:

[root@node100 ~]# docker inspect cbb729c71787 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.18.0.2",

mysql-dc2:

[root@node100 ~]# docker inspect 3c0503535c8a | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.19.0.2",

dtle-dc1:服务端+客户端

[root@node100 ~]# docker inspect 46d4d92a3911 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.18.0.4",

"IPAddress": "172.20.0.2",

dtle-consul:

[root@node100 ~]# docker inspect 9f5d1987a4bf | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.18.0.3",

"IPAddress": "172.20.0.3",

dtle-dc2:客户端

[root@node100 ~]# docker inspect 7ca4f490d4c4 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.19.0.3",

"IPAddress": "172.20.0.4",

修改DTLE的Nomad配置:

修改容器dtle-dc1内的Nomad配置并重启(角色:服务端+客户端)

docker exec -u root -it dtle-dc1 vi /dtle/etc/dtle/nomad.hcl

[root@node100 ~]# docker exec -u root -it dtle-dc1 cat /dtle/etc/dtle/nomad.hcl

name = "nomad1" # rename for each node

datacenter = "dc1"

data_dir = "/dtle/var/lib/nomad"

plugin_dir = "/dtle/usr/share/dtle/nomad-plugin"

log_level = "Info"

log_file = "/dtle/var/log/nomad/"

disable_update_check = true

bind_addr = "172.20.0.2"

# change ports if multiple nodes run on a same machine

ports {

http = 4646

rpc = 4647

serf = 4648

}

addresses {

# Default to `bind_addr`. Or set individually here.

#http = "127.0.0.1"

#rpc = "127.0.0.1"

#serf = "127.0.0.1"

}

advertise {

http = "172.20.0.2"

rpc = "172.20.0.2"

serf = "172.20.0.2"

}

server {

enabled = true

bootstrap_expect = 1

}

client {

enabled = true

options = {

"driver.blacklist" = "docker,exec,java,mock,qemu,rawexec,rkt"

}

}

consul {

address = "dtle-consul:8500"

}

plugin "dtle" {

config {

log_level = "INFO"

data_dir = "/dtle/var/lib/nomad"

nats_bind = "172.20.0.2:8193"

nats_advertise = "172.20.0.2:8193"

# Repeat the consul address above.

consul = "dtle-consul:8500"

#api_addr = "127.0.0.1:8190" # for compatibility API

nomad_addr = "172.20.0.2:4646" # compatibility API need to access a nomad server

publish_metrics = false

stats_collection_interval = 15

}

}

docker exec -u root -it dtle-dc1 rm -rf /dtle/var/lib/nomad

docker restart dtle-dc1

其中:

由于dtle-dc1容器存在两个网络(与mysql通信的内网dtle-net-dc1,和与dtle-dc2通信的公网delt-net-public),需要指定bind_addr和advertise.rpc为本机的dtle-net-public的网络地址,此处为:172.20.0.2

修改容器dtle-dc2内的Nomad配置并重启:(角色:客户端)

docker exec -u root -it dtle-dc2 vi /dtle/etc/dtle/nomad.hcl

[root@node100 ~]# docker exec -u root -it dtle-dc2 cat /dtle/etc/dtle/nomad.hcl

name = "nomad2" # rename for each node

datacenter = "dc1"

data_dir = "/dtle/var/lib/nomad"

plugin_dir = "/dtle/usr/share/dtle/nomad-plugin"

log_level = "Info"

log_file = "/dtle/var/log/nomad/"

disable_update_check = true

bind_addr = "172.20.0.4"

# change ports if multiple nodes run on a same machine

ports {

http = 4646

rpc = 4647

serf = 4648

}

addresses {

# Default to `bind_addr`. Or set individually here.

#http = "127.0.0.1"

#rpc = "127.0.0.1"

#serf = "127.0.0.1"

}

advertise {

http = "172.20.0.4"

rpc = "172.20.0.4"

serf = "172.20.0.4"

}

server {

#重要!

#只有dtle-dc1作为server,dtle-dc2仅作为client

enabled = false

}

client {

enabled = true

options = {

"driver.blacklist" = "docker,exec,java,mock,qemu,rawexec,rkt"

}

}

consul {

address = "dtle-consul:8500"

}

plugin "dtle" {

config {

log_level = "INFO"

data_dir = "/dtle/var/lib/nomad"

nats_bind = "172.20.0.4:8193"

nats_advertise = "172.20.0.4:8193"

# Repeat the consul address above.

consul = "dtle-consul:8500"

#api_addr = "127.0.0.1:8190" # for compatibility API

nomad_addr = "172.20.0.4:4646" # compatibility API need to access a nomad server

publish_metrics = false

stats_collection_interval = 15

}

}

docker exec -u root -it dtle-dc2 rm -rf /dtle/var/lib/nomad

docker restart dtle-dc2

其中:

由于dtle-dc2容器存在两个网络(与mysql通信的内网dtle-net-dc2,和与dtle-dc1通信的公网dtle-net-public),需要指定bind_addr和advertise.rpc为本机的dtle-net-public的网络地址,此处为:172.20.0.4

检查dtle-dc1,dtle-dc2服务是否正常

dtle-dc1:

进行容器中执行:

[root@node100 ~]# docker exec -u root -it dtle-dc1 bash

curl -XGET "172.20.0.2:4646/v1/nodes" -s

dtle-dc2:

进行容器中执行:

[root@node100 ~]# docker exec -u root -it dtle-dc2 bash

curl -XGET "172.20.0.4:4646/v1/nodes" -s

如果有返回数据,表示正常

或者通过web ui,确定我们构建了一个 1个server 2个client的nomad环境

配置dc1到dc2的复制:

获取mysql-dc1的GTID:

[root@node100 ~]# mysql -h 127.0.0.1 -P33061 -uroot -ppass -e "show master status\G" | grep "Executed_Gtid_Set"

Executed_Gtid_Set: 35010e32-2bf4-11ec-86c2-0242ac120002:1-5

准备文件cross-network-replication-dc1-2-dc2.json

[root@node100 ~]# cat cross-network-replication-dc1-2-dc2.json

{

"Job": {

"ID":"cross-network-replication-dc1-2-dc2",

"Datacenters": ["dc1"],

"TaskGroups": [{

"Name": "src",

"Tasks": [{

"Name": "src",

"Driver": "dtle",

"Constraints": [{

"LTarget": "${node.unique.name}",

"RTarget": "nomad1",

"Operand": "="

}],

"Config": {

"Gtid":"35010e32-2bf4-11ec-86c2-0242ac120002:1-5",

"ReplicateDoDb": [{

"TableSchema": "demo",

"Tables": [{

"TableName": "demo_tbl"

}]

}],

"ConnectionConfig": {

"Host":"mysql-dc1",

"Port": 3306,

"User": "root",

"Password": "pass"

}

}

}]

}, {

"Name": "dest",

"Tasks": [{

"Name": "dest",

"Driver": "dtle",

"Constraints": [{

"LTarget": "${node.unique.name}",

"RTarget": "nomad2",

"Operand": "="

}],

"Config": {

"ConnectionConfig": {

"Host":"mysql-dc2",

"Port": 3306,

"User": "root",

"Password": "pass"

}

}

}]

}]

}

}

其中定义了:

源端/目标端的连接字符串

要复制的表为demo.demo_tbl

GTID点位,表示此复制是增量复制(双向复制只支持增量复制)

源任务(src)配置在dc1的dtle节点上执行(通过Constraints指定)

目标任务(dest)配置在dc2的dtle节点上执行(通过Constraints指定)

创建dc1到dc2的复制任务:这个在服务端执行也就是dtle-dc1上执行

[root@node100 ~]# docker exec -u root -it dtle-dc1 bash

[root@46d4d92a3911 dtle]#curl -XPOST "http://172.20.0.2:4646/v1/jobs" -d @cross-network-replication-dc1-2-dc2.json -s

查看作业状态:

[root@46d4d92a3911 dtle]#curl -XGET "http://172.20.0.2:4646/v1/job/cross-network-replication-dc1-2-dc2" -s

配置dc2到dc1的复制:

获取mysql-dc2的GTID:

[root@node100 ~]# mysql -h 127.0.0.1 -P33062 -uroot -ppass -e "show master status\G" | grep "Executed_Gtid_Set"

Executed_Gtid_Set: 3a3efef8-2bf4-11ec-8f05-0242ac130002:1-5

准备文件cross-network-replication-dc2-2-dc1.json

[root@node100 ~]# cat cross-network-replication-dc2-2-dc1.json

{

"Job": {

"ID":"cross-network-replication-dc2-2-dc1",

"Datacenters": ["dc1"],

"TaskGroups": [{

"Name": "src",

"Tasks": [{

"Name": "src",

"Driver": "dtle",

"Constraints": [{

"LTarget": "${node.unique.name}",

"RTarget": "nomad2",

"Operand": "="

}],

"Config": {

"Gtid":"3a3efef8-2bf4-11ec-8f05-0242ac130002:1-5",

"ReplicateDoDb": [{

"TableSchema": "demo",

"Tables": [{

"TableName": "demo_tbl"

}]

}],

"ConnectionConfig": {

"Host":"mysql-dc2",

"Port": 3306,

"User": "root",

"Password": "pass"

}

}

}]

}, {

"Name": "dest",

"Tasks": [{

"Name": "dest",

"Driver": "dtle",

"Constraints": [{

"LTarget": "${node.unique.name}",

"RTarget": "nomad1",

"Operand": "="

}],

"Config": {

"ConnectionConfig": {

"Host":"mysql-dc1",

"Port": 3306,

"User": "root",

"Password": "pass"

}

}

}]

}]

}

}

其中与dc1到gc2复制任务不同的是:

源端/目标端的连接字符串

GTID点位

源任务(src)配置在dc2的dtle节点上执行

目标任务(dest)配置在dc1的dtle节点上执行

创建dc2到dc1的复制任务:这个在服务端执行也就是dtle-dc1上执行

[root@node100 ~]# docker exec -u root -it dtle-dc1 bash

[root@46d4d92a3911 dtle]#curl -XPOST "http://172.20.0.2:4646/v1/jobs" -d @cross-network-replication-dc2-2-dc1.json -s

查看作业状态:

[root@46d4d92a3911 dtle]#curl -XGET "http://172.20.0.2:4646/v1/job/cross-network-replication-dc2-2-dc1" -s

测试:

在mysql-dc1数据库中创建数据库和表:

mysql -h 127.0.0.1 -P 33061 -uroot -ppass

Database changed

MySQL [demo]> show tables;

+----------------+

| Tables_in_demo |

+----------------+

| demo_tbl |

+----------------+

1 row in set (0.00 sec)

MySQL [demo]> show create table demo_tbl;

+----------+----------------------------------------------------------------------------------------------+

| Table | Create Table |

+----------+----------------------------------------------------------------------------------------------+

| demo_tbl | CREATE TABLE `demo_tbl` (

`id` int(11) DEFAULT NULL

) ENGINE=InnoDB DEFAULT CHARSET=latin1 |

+----------+----------------------------------------------------------------------------------------------+

1 row in set (0.00 sec)

MySQL [demo]> select * from demo_tbl;

+------+

| id |

+------+

| 1 |

| 2 |

| 3 |

| 4 |

| 5 |

| 6 |

| 7 |

| 8 |

+------+

8 rows in set (0.00 sec)

在mysql-dc2数据库中查看:(mysql-dc2中不需要创建,dtle会自动同步表结构和数据)

MySQL [(none)]> use demo;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

MySQL [demo]> select * from demo_tbl;

+------+

| id |

+------+

| 1 |

| 2 |

| 3 |

| 4 |

| 5 |

| 6 |

| 7 |

| 8 |

+------+

8 rows in set (0.00 sec)

在任何一个数据库(mysql-dc1,mysql-dc2)中对这张表进行DDL/DML操作,数据都会进行同步

数据冲突:

dtle不检测数据冲突,如果回放报错(如应数据冲突导致update了不存在的列),则job报错

其中,DML insert 使用replace回入,故insert 冲突时,效果是last-win

建议由业务确保数据不会冲突

DTLE跨数据中的的双向复制配置案例

DTLE跨数据中的的双向复制配置案例