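Installation environment:

Physical host requirements:

At least 8 GB of RAM per machine and a CPU of Intel Core i5 class or better. If these requirements are not met, the installation may fail.

Virtual machine: VMware 15.1.0

Operating system: rhel-server-7.0-x86_64

Grid Infrastructure installation package: linuxx64_12201_grid_home

Database installation package: linuxx64_12201_database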

[root@rhel7-111-rac1 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.0 (Maipo)

[root@rhel7-111-rac1 ~]# uname -a

Linux rhel7-111-rac1 3.10.0-123.el7.x86_64 #1 SMP Mon May 5 11:16:57 EDT 2014 x86_64 x86_64 x86_64 GNU/Linux

Oracle version: Oracle 12.2.0.1

ASM disk layout (1024G):

DATA:600G

DG_MGMT:200G

DG_OCR:200G

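Because this RHEL system is not registered, the YUM repositories have to be reconfigured and the local installation media mounted as a repository.

Remove the original RHEL yum packages and install yum from the 163 mirror:

rpm -e yum --nodeps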

rpm -e yum-langpacks-0.4.2-3.el7.noarch

rpm -e yum-utils-1.1.31-24.el7.noarch --nodeps

rpm -e yum-metadata-parser-1.1.4-10.el7.x86_64 --nodeps

rpm -e yum-rhn-plugin-2.0.1-4.el7.noarch --nodeps

rpm -e PackageKit-yum-0.8.9-11.el7.x86_64 --nodeps

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/python-urlgrabber-3.10-10.el7.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/rpm-4.11.3-45.el7.x86_64.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-3.4.3-168.el7.centos.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-plugin-fastestmirror-1.1.31-54.el7_8.noarch.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-metadata-parser-1.1.4-10.el7.x86_64.rpm

wget http://mirrors.163.com/centos/7/os/x86_64/Packages/yum-utils-1.1.31-54.el7_8.noarch.rpm

rpm -Uvh python-urlgrabber-3.10-10.el7.noarch.rpm

rpm -Uvh rpm-4.11.3-45.el7.x86_64.rpm --nodeps

[root@node111 yum_install]# rpm -ivh yum-3.4.3-168.el7.centos.noarch.rpm yum-plugin-fastestmirror-1.1.31-54.el7_8.noarch.rpm yum-metadata-parser-1.1.4-10.el7.x86_64.rpm yum-utils-1.1.31-54.el7_8.noarch.rpm

Install the development tools and related package groups:

yum groupinstall 'Server with GUI' -y

yum groupinstall 'Hardware Monitoring Utilities' -y

yum groupinstall 'Large Systems Performance' -y

yum groupinstall 'Network file system client' -y

yum groupinstall 'Performance Tools' -y

yum groupinstall 'Compatibility Libraries' -y

yum groupinstall 'Development Tools' -y

Mount the ISO file:

The disc image is mounted automatically only after you log in to the graphical desktop.

[root@node111 ~]# df -Th

Filesystem Type Size Used Avail Use% Mounted on

/dev/mapper/rhel-root xfs 97G 3.2G 94G 4% /

devtmpfs devtmpfs 1.4G 0 1.4G 0% /dev

tmpfs tmpfs 1.4G 84K 1.4G 1% /dev/shm

tmpfs tmpfs 1.4G 8.8M 1.4G 1% /run

tmpfs tmpfs 1.4G 0 1.4G 0% /sys/fs/cgroup

/dev/sda1 xfs 497M 119M 379M 24% /boot

/dev/sr0 iso9660 3.5G 3.5G 0 100% /run/media/root/RHEL-7.0 Server.x86_64

[root@node111 ~]# mount -o loop -t iso9660 /dev/sr0 /mnt

[root@node111 ~]# cd /mnt

[root@node111 mnt]# ls

addons EULA images LiveOS Packages repodata RPM-GPG-KEY-redhat-release

EFI GPL isolinux media.repo release-notes RPM-GPG-KEY-redhat-beta TRANS.TBL

[root@node111 yum.repos.d]# pwd

/etc/yum.repos.d

[root@node111 yum.repos.d]# cat rhel.repo

[rhel]

baseurl=file:///mnt

enabled=1

gpgcheck=0

[root@node111 yum.repos.d]# yum makecache
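The repo file shown above can also be generated from a script. The following is a minimal sketch, not part of the original procedure: the function name `write_local_repo` and the `name=` line are assumptions added for illustration, and the demo writes to a scratch file rather than to /etc/yum.repos.d.

```shell
# Write a yum repo definition that points at a locally mounted install ISO.
# Arguments: 1 = repo file to create, 2 = mount point of the ISO.
write_local_repo() {
  local repo_file="$1" mount_point="$2"
  cat > "$repo_file" <<EOF
[rhel]
name=RHEL local media
baseurl=file://${mount_point}
enabled=1
gpgcheck=0
EOF
}

# Demo against a scratch file; on a real host the target would be
# /etc/yum.repos.d/rhel.repo (requires root).
demo_repo="$(mktemp)"
write_local_repo "$demo_repo" /mnt
cat "$demo_repo"
```

After writing the real file, `yum makecache` rebuilds the metadata cache as in the transcript above.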

Configuring openfiler and creating the iSCSI disks (omitted)

Reference: https://blog.51cto.com/zhaodongwei/1749759

Configure iSCSI and attach the targets as local disks (run on both servers):

iscsiadm -m discovery -t sendtargets -p 172.16.1.110

[root@rhel7-111-rac1 ~]# cd /mnt/Server/

[root@rhel7-111-rac1 ~]# yum install iscsi-initiator-utils

[root@rhel7-111-rac1 ~]# service iscsid start

[root@rhel7-111-rac1 ~]# chkconfig iscsid on

[root@rhel7-111-rac1 ~]# chkconfig iscsi on

[root@node111 yum.repos.d]# iscsiadm -m discovery -t sendtargets -p 172.16.1.110

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.4715ede47217.DG_OCR

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.8b0295f08d99.DG_MGMT

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.cd3236d92c30.data

Attach the targets so they are used as local disks (set them to log in automatically):

iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.4715ede47217.DG_OCR -p 172.16.1.110 --op update -n node.startup -v automatic

iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.8b0295f08d99.DG_MGMT -p 172.16.1.110 --op update -n node.startup -v automatic

iscsiadm -m node -T iqn.2006-01.com.openfiler:tsn.cd3236d92c30.data -p 172.16.1.110 --op update -n node.startup -v automatic
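Rather than typing one command per target, the discovery output can be turned into the update commands. This is a sketch only: `gen_startup_cmds` is a hypothetical helper, it prints the commands without running them, and it assumes every discovery line has the "portal,tpgt iqn" shape shown above.

```shell
# Read "portal,tpgt iqn" lines (the format printed by sendtargets discovery)
# on stdin and print one iscsiadm update command per target.
# Nothing is executed; review the output, then pipe it to sh as root.
gen_startup_cmds() {
  local portal_ip="$1" portal target
  while read -r portal target; do
    [ -n "$target" ] || continue   # skip blank or malformed lines
    printf 'iscsiadm -m node -T %s -p %s --op update -n node.startup -v automatic\n' \
      "$target" "$portal_ip"
  done
}

gen_startup_cmds 172.16.1.110 <<'EOF'
172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.4715ede47217.DG_OCR
172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.8b0295f08d99.DG_MGMT
172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.cd3236d92c30.data
EOF
```

On a live host the here-document could be replaced by the output of the discovery command itself.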

====

To detach the disks:

iscsiadm -m node -U all

iscsiadm -m node -o delete -T iqn.2006-01.com.openfiler:tsn.8a10aa9821b7.fra

iscsiadm -m node -o delete -T iqn.2006-01.com.openfiler:tsn.1ccee8d6d76a.ocr

iscsiadm -m node -o delete -T iqn.2006-01.com.openfiler:tsn.92bfa9183352.data

====

[root@rhel7-111-rac1 ~]# service iscsi restart

Stopping iscsi: [ OK ]

[root@rhel7-111-rac1 ~]# service iscsi start

Starting iscsi: [ OK ]

View the iSCSI targets connected in the current session:

[root@rhel7-111-rac1 ~]# iscsiadm -m node session

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.8a10aa9821b7.fra

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.1ccee8d6d76a.ocr

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.92bfa9183352.data

172.16.1.110:3260,1 iqn.2006-01.com.openfiler:tsn.a6965e0fce9c

Bind the shared disks with udev

for i in b c d

do

echo "KERNEL==\"sd?\",SUBSYSTEM==\"block\", PROGRAM==\"/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/\$name\",RESULT==\"`/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/sd$i`\", SYMLINK+=\"asm-disk$i\",OWNER=\"grid\", GROUP=\"asmadmin\",MODE=\"0660\"" >>/etc/udev/rules.d/99-oracle-asmdevices.rules

done
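The heavy quoting in the loop above is easy to get wrong. The sketch below builds the same rule text with `printf`, taking the disk identifier as an argument so the output can be inspected before anything is written. `make_asm_rule` is a hypothetical helper and `EXAMPLE_WWID` is a placeholder, not a real identifier; on a real host the second argument would come from `/usr/lib/udev/scsi_id`.

```shell
# Print one udev rule line for an ASM disk.
# Arguments: 1 = device letter (b, c, d ...), 2 = identifier reported by scsi_id.
# $name inside the single-quoted format is a udev substitution, not a shell variable.
make_asm_rule() {
  local letter="$1" wwid="$2"
  printf 'KERNEL=="sd?", SUBSYSTEM=="block", PROGRAM=="/usr/lib/udev/scsi_id --whitelisted --replace-whitespace --device=/dev/$name", RESULT=="%s", SYMLINK+="asm-disk%s", OWNER="grid", GROUP="asmadmin", MODE="0660"\n' \
    "$wwid" "$letter"
}

# Placeholder identifier for illustration only.
make_asm_rule b EXAMPLE_WWID
```

Redirecting the output to /etc/udev/rules.d/99-oracle-asmdevices.rules (as root) gives the same file the loop produces.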

/sbin/partprobe /dev/sdb

/sbin/partprobe /dev/sdc

/sbin/partprobe /dev/sdd

View the disk details:

/sbin/udevadm test /sys/block/sdb

/sbin/udevadm test /sys/block/sdc

/sbin/udevadm test /sys/block/sdd

[root@rhel7-112-rac1 ~]# /sbin/udevadm control --reload-rules

[root@rhel7-112-rac1 ~]# ll /dev/asm*

lrwxrwxrwx 1 root root 3 Apr 19 17:15 /dev/asm-diskc -> sdc

lrwxrwxrwx 1 root root 3 Apr 19 17:16 /dev/asm-diskd -> sdd

lrwxrwxrwx 1 root root 3 Apr 19 17:16 /dev/asm-diske -> sde

lrwxrwxrwx 1 root root 3 Apr 19 17:16 /dev/asm-diskf -> sdf

lrwxrwxrwx 1 root root 3 Apr 19 17:16 /dev/asm-diskg -> sdg

[root@node112 ~]# ll /dev/sd*

brw-rw---- 1 root disk 8, 0 Jan 19 08:21 /dev/sda

brw-rw---- 1 root disk 8, 1 Jan 19 08:21 /dev/sda1

brw-rw---- 1 root disk 8, 2 Jan 19 08:21 /dev/sda2

brw-rw---- 1 grid asmadmin 8, 16 Jan 19 08:31 /dev/sdb

brw-rw---- 1 grid asmadmin 8, 32 Jan 19 08:31 /dev/sdc

brw-rw---- 1 grid asmadmin 8, 48 Jan 19 08:31 /dev/sdd

Part 2: Pre-installation preparation

Edit /etc/selinux/config:

[root@rhel7-111-rac1 ~]# vi /etc/selinux/config

SELINUX=disabled

Disable the firewall:

[root@rhel7-111-rac1 ~]# systemctl stop firewalld

[root@rhel7-111-rac1 ~]# systemctl disable firewalld

Configure the kernel parameters:

[root@rhel7-111-rac1 ~]# vi /etc/sysctl.conf

fs.file-max = 6815744

kernel.sem = 250 32000 100 128

kernel.shmmni = 4096

kernel.shmall = 1073741824

kernel.shmmax = 4398046511104

kernel.panic_on_oops = 1

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 1048576

net.ipv4.conf.all.rp_filter = 2

net.ipv4.conf.default.rp_filter = 2

fs.aio-max-nr = 1048576

net.ipv4.ip_local_port_range = 9000 65500
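The two shared-memory values in this listing are related: kernel.shmall is counted in pages, while kernel.shmmax is in bytes. The arithmetic below is a small sanity check, assuming a 4 KiB page size (the usual x86_64 default, which `getconf PAGE_SIZE` reports on a live system).

```shell
page_size=4096               # assumption: 4 KiB pages
shmall_pages=1073741824      # kernel.shmall from the listing (pages)
shmmax_bytes=4398046511104   # kernel.shmmax from the listing (bytes)

# Total shared memory allowed system-wide, converted to bytes.
shmall_bytes=$(( shmall_pages * page_size ))
echo "shmall covers ${shmall_bytes} bytes"

if [ "$shmall_bytes" -ge "$shmmax_bytes" ]; then
  echo "shmall is large enough to hold one segment of shmmax bytes"
else
  echo "shmall is smaller than shmmax; a maximum-size segment could not be allocated"
fi
```

With these numbers both limits work out to the same 4 TiB, far above the memory of the virtual machines used here.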

Apply the changes:

[root@rhel7-111-rac1 ~]# /sbin/sysctl -p

Set the system resource limits:

[root@rhel7-111-rac1 ~]# vi /etc/security/limits.conf

oracle soft nofile 1024

oracle hard nofile 65536

oracle soft nproc 16384

oracle hard nproc 16384

oracle soft stack 10240

oracle hard stack 32768

oracle hard memlock 134217728

oracle soft memlock 134217728

grid soft nofile 1024

grid hard nofile 65536

grid soft nproc 16384

grid hard nproc 16384

grid soft stack 10240

grid hard stack 32768

grid hard memlock 134217728

grid soft memlock 134217728
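Since the oracle and grid entries are identical apart from the user name, the block can be generated instead of typed twice. This is a sketch; `gen_limits` is a hypothetical helper that only prints the lines, using the same values as the listing above.

```shell
# Print the limits.conf entries used in this guide for each user given.
gen_limits() {
  local user
  for user in "$@"; do
    cat <<EOF
$user soft nofile 1024
$user hard nofile 65536
$user soft nproc 16384
$user hard nproc 16384
$user soft stack 10240
$user hard stack 32768
$user hard memlock 134217728
$user soft memlock 134217728
EOF
  done
}

gen_limits oracle grid
```

Appending the output to /etc/security/limits.conf as root has the same effect as editing the file by hand.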

Create the groups and users:

[root@rhel7-122-rac1 ~]# groupadd -g 54321 oinstall

[root@rhel7-122-rac1 ~]# groupadd -g 54322 dba

[root@rhel7-122-rac1 ~]# groupadd -g 54323 oper

[root@rhel7-122-rac1 ~]# groupadd -g 54324 backupdba

[root@rhel7-122-rac1 ~]# groupadd -g 54325 dgdba

[root@rhel7-122-rac1 ~]# groupadd -g 54326 kmdba

[root@rhel7-122-rac1 ~]# groupadd -g 54327 asmdba

[root@rhel7-122-rac1 ~]# groupadd -g 54328 asmoper

[root@rhel7-122-rac1 ~]# groupadd -g 54329 asmadmin

[root@rhel7-122-rac1 ~]# groupadd -g 54330 racdba

[root@rhel7-122-rac1 ~]# useradd -u 54321 -g oinstall -G dba,asmdba,backupdba,dgdba,kmdba,racdba,oper oracle

[root@rhel7-122-rac1 ~]# useradd -u 54322 -g oinstall -G asmadmin,asmdba,asmoper,dba grid

[root@rhel7-122-rac1 ~]# echo "oracle"|passwd --stdin oracle

[root@rhel7-122-rac1 ~]# echo "oracle"|passwd --stdin grid
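The group IDs above run consecutively from 54321, so the groupadd commands can be produced by a loop. The sketch below only prints the commands (a dry run); `print_group_cmds` is a hypothetical name. The IDs must be identical on both nodes, which is one reason to script this step.

```shell
# Print the groupadd commands with consecutive GIDs starting at 54321.
# Dry run only: pipe the output to sh as root to actually create the groups.
print_group_cmds() {
  local gid=54321 group
  for group in oinstall dba oper backupdba dgdba kmdba asmdba asmoper asmadmin racdba; do
    echo "groupadd -g $gid $group"
    gid=$(( gid + 1 ))
  done
}

print_group_cmds
```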

Add the IP addresses to /etc/hosts:

[root@rhel7-122-rac1 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# Public

172.16.1.111 rhel7-111-rac1.localdomain rhel7-111-rac1

172.16.1.112 rhel7-112-rac2.localdomain rhel7-112-rac2

# Virtual

172.16.1.121 rhel7-121-rac1-vip.localdomain rhel7-121-rac1-vip

172.16.1.122 rhel7-122-rac2-vip.localdomain rhel7-122-rac2-vip

# Private

10.1.1.111 rhel7-111-rac1-priv.localdomain rhel7-111-rac1-priv

10.1.1.112 rhel7-112-rac2-priv.localdomain rhel7-112-rac2-priv

#scan

172.16.1.188 rac-scan.localdomain rac-scan
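Before running the installer it helps to confirm that every name it will use is present in the hosts file on both nodes. The following sketch does the lookup with awk against a scratch copy of a few of the entries; `lookup_host` is a hypothetical helper, and on a real node the first argument would be /etc/hosts.

```shell
# Print the IP mapped to a host name in a hosts-format file (comment lines skipped).
lookup_host() {
  local hosts_file="$1" name="$2"
  awk -v name="$name" '
    $1 ~ /^#/ { next }
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
  ' "$hosts_file"
}

# Scratch copy of some of the entries above, for demonstration.
demo_hosts="$(mktemp)"
cat > "$demo_hosts" <<'EOF'
# Public
172.16.1.111 rhel7-111-rac1.localdomain rhel7-111-rac1
172.16.1.112 rhel7-112-rac2.localdomain rhel7-112-rac2
# Private
10.1.1.111 rhel7-111-rac1-priv.localdomain rhel7-111-rac1-priv
#scan
172.16.1.188 rac-scan.localdomain rac-scan
EOF

for h in rhel7-111-rac1 rhel7-112-rac2 rhel7-111-rac1-priv rac-scan; do
  echo "$h -> $(lookup_host "$demo_hosts" "$h")"
done
```

An empty result for any name means the entry is missing or misspelled.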

Set the hostnames:

[root@node111 yum.repos.d]# hostnamectl set-hostname rhel7-111-rac1

[root@node112 yum.repos.d]# hostnamectl set-hostname rhel7-112-rac2

Create the directories:

[root@rhel7-122-rac1 ~]# mkdir -p /u01/app/12.2.0.1/grid

[root@rhel7-122-rac1 ~]# mkdir -p /u01/app/grid

[root@rhel7-122-rac1 ~]# mkdir -p /u01/app/oracle/product/12.2.0.1/db_1

[root@rhel7-122-rac1 ~]# chown -R grid:oinstall /u01

[root@rhel7-122-rac1 ~]# chmod -R 775 /u01/

[root@rhel7-122-rac1 ~]# chown -R oracle:oinstall /u01/app/oracle

Set the environment variables for the grid user

[root@rhel7-111-rac1 ~]# su - grid

[grid@rhel7-111-rac1 ~]$ cd

Add the following:

[grid@rhel7-111-rac1 ~]$ vi .bash_profile

export ORACLE_BASE=/u01/app/grid

export ORACLE_HOME=/u01/app/12.2.0.1/grid

export ORACLE_SID=+ASM1 # note: this must be changed on the rac2 node

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022

Set the environment variables for the oracle user

[root@rhel7-111-rac1 ~]# su - oracle

[oracle@rhel7-111-rac1 ~]$ cd

Add the following:

[oracle@rhel7-111-rac1 ~]$ vi .bash_profile

export ORACLE_BASE=/u01/app/oracle

export ORACLE_HOME=/u01/app/oracle/product/12.2.0.1/db_1

export ORACLE_SID=orcl1 # note: this must be changed on the rac2 node

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

umask 022
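The only per-node difference in these profiles is ORACLE_SID. One way to keep a single profile for both nodes is to derive the SID from the host name. The sketch below assumes the host names end in "rac" followed by the node number, as in this guide, and that the second node's instances follow the usual numbering (orcl2, +ASM2); `sid_for_host` is a hypothetical helper.

```shell
# Derive the instance name from the trailing node number of the host name,
# e.g. prefix "orcl" on host "rhel7-112-rac2" gives "orcl2".
sid_for_host() {
  local prefix="$1" host="$2" node_no
  node_no="${host##*rac}"     # text after the last "rac"
  case "$node_no" in
    ''|*[!0-9]*) echo "cannot derive node number from $host" >&2; return 1 ;;
  esac
  echo "${prefix}${node_no}"
}

sid_for_host orcl rhel7-111-rac1
sid_for_host +ASM rhel7-112-rac2
```

In a profile this could be used as `export ORACLE_SID=$(sid_for_host orcl "$(hostname -s)")`.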

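Set up SSH trust between the nodes

Basic steps:

1) Generate RSA and DSA keys on rac1, then append both public keys to the authorized_keys file.

2) Copy rac1's authorized_keys file to rac2.

3) Likewise generate RSA and DSA keys on rac2, then append rac2's public keys to that authorized_keys file.

4) Copy rac2's authorized_keys file back to rac1, overwriting the earlier authorized_keys file.

Note 1: After this, the authorized_keys files on rac1 and rac2 both contain each other's RSA and DSA public keys.

Note 2: This must be done for both the grid and oracle users (the oracle user is used as the example here).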
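The end state of the four steps is one authorized_keys file, identical on both nodes, that holds every node's public keys. The sketch below shows that merge on scratch files with placeholder key text. It is a variation on the procedure (it merges and de-duplicates instead of appending and copying back), and `merge_keys` is a hypothetical helper.

```shell
# Merge any number of public-key files into one authorized_keys file,
# dropping duplicate lines and setting the permissions sshd expects.
merge_keys() {
  local out="$1"; shift
  sort -u -o "$out" "$@"
  chmod 600 "$out"
}

# Demo with placeholder key material in a scratch directory.
work="$(mktemp -d)"
printf 'ssh-rsa AAAA-placeholder-rac1 oracle@rhel7-111-rac1\n' > "$work/rac1.pub"
printf 'ssh-rsa AAAA-placeholder-rac2 oracle@rhel7-112-rac2\n' > "$work/rac2.pub"
merge_keys "$work/authorized_keys" "$work/rac1.pub" "$work/rac2.pub" "$work/rac1.pub"
cat "$work/authorized_keys"
```

The merged file would then be copied to ~/.ssh/authorized_keys on every node for the user in question.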

Configuration for the oracle user:

1. On the rac1 server:

(1) Generate the RSA and DSA keys:

[oracle@rhel7-111-rac1 ~]$ ssh-keygen -t rsa

[oracle@rhel7-111-rac1 ~]$ ssh-keygen -t dsa

(2) Append both the RSA and DSA public keys to the authorized_keys file:

[oracle@rhel7-111-rac1 ~]$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

[oracle@rhel7-111-rac1 ~]$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

(3) Copy rac1's authorized_keys file to rac2:

[oracle@rhel7-111-rac1 ~]$ cd /home/oracle/.ssh

[oracle@rhel7-111-rac1 .ssh]$ scp authorized_keys 172.16.1.112:/home/oracle/.ssh/

2. On the rac2 server:

(1) Generate the RSA and DSA keys:

[oracle@rhel7-112-rac2 ~]$ ssh-keygen -t rsa

[oracle@rhel7-112-rac2 ~]$ ssh-keygen -t dsa

(2) Append rac2's RSA and DSA public keys to the authorized_keys file copied from rac1:

[oracle@rhel7-112-rac2 ~]$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

[oracle@rhel7-112-rac2 ~]$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

(3) Copy rac2's authorized_keys file to rac1, overwriting the earlier authorized_keys file:

[oracle@rhel7-112-rac2 ~]$ cd /home/oracle/.ssh

[oracle@rhel7-112-rac2 .ssh]$ scp authorized_keys 172.16.1.111:/home/oracle/.ssh/

3. Check that rac1 and rac2 now have the same authorized_keys file:

[oracle@rhel7-111-rac1 .ssh]$ more authorized_keys

4. Test SSH:

Test from both rac1 and rac2. It is working if each command returns the date without prompting:

[oracle@rhel7-111-rac1 ~]$ ssh rhel7-111-rac1 date

[oracle@rhel7-111-rac1 ~]$ ssh rhel7-112-rac2 date

[oracle@rhel7-112-rac2 ~]$ ssh rhel7-111-rac1 date

[oracle@rhel7-112-rac2 ~]$ ssh rhel7-112-rac2 date
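The four checks can be wrapped in a loop that fails fast when a password prompt would appear. This is a sketch: `check_ssh_trust` and the `SSH_BIN` override are hypothetical names introduced here, while `BatchMode` and `ConnectTimeout` are standard OpenSSH client options. The demo call substitutes `true` for ssh so nothing is contacted.

```shell
# Run "date" on each node over ssh without allowing password prompts.
# Prints OK/FAIL per node and returns non-zero if any node failed.
# SSH_BIN can be overridden for dry runs; it defaults to the real ssh.
check_ssh_trust() {
  local ssh_bin="${SSH_BIN:-ssh}" failed=0 node
  for node in "$@"; do
    if "$ssh_bin" -o BatchMode=yes -o ConnectTimeout=5 "$node" date >/dev/null 2>&1; then
      echo "OK   $node"
    else
      echo "FAIL $node"
      failed=1
    fi
  done
  return "$failed"
}

# Dry run with a stub in place of ssh; drop SSH_BIN=true to test for real.
SSH_BIN=true check_ssh_trust rhel7-111-rac1 rhel7-112-rac2
```

Run it once as oracle and once as grid on each node, so all four user and node combinations are covered.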

Configure the grid user in the same way as the oracle user.

Configuration for the grid user:

1. On the rac1 server:

(1) Generate the RSA and DSA keys:

[grid@rhel7-111-rac1 ~]$ ssh-keygen -t rsa

[grid@rhel7-111-rac1 ~]$ ssh-keygen -t dsa

(2) Append both the RSA and DSA public keys to the authorized_keys file:

[grid@rhel7-111-rac1 ~]$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

[grid@rhel7-111-rac1 ~]$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

(3) Copy rac1's authorized_keys file to rac2:

[grid@rhel7-111-rac1 ~]$ cd /home/grid/.ssh

[grid@rhel7-111-rac1 .ssh]$ scp authorized_keys 172.16.1.112:/home/grid/.ssh/

2. On the rac2 server:

(1) Generate the RSA and DSA keys:

[grid@rhel7-112-rac2 ~]$ ssh-keygen -t rsa

[grid@rhel7-112-rac2 ~]$ ssh-keygen -t dsa

(2) Append rac2's RSA and DSA public keys to the authorized_keys file copied from rac1:

[grid@rhel7-112-rac2 ~]$ cat .ssh/id_rsa.pub >> .ssh/authorized_keys

[grid@rhel7-112-rac2 ~]$ cat .ssh/id_dsa.pub >> .ssh/authorized_keys

(3) Copy rac2's authorized_keys file to rac1, overwriting the earlier authorized_keys file:

[grid@rhel7-112-rac2 ~]$ cd /home/grid/.ssh

[grid@rhel7-112-rac2 .ssh]$ scp authorized_keys 172.16.1.111:/home/grid/.ssh/

3. Check that rac1 and rac2 now have the same authorized_keys file:

[grid@rhel7-111-rac1 .ssh]$ more authorized_keys

4. Test SSH:

Test from both rac1 and rac2. It is working if each command returns the date without prompting:

[grid@rhel7-111-rac1 ~]$ ssh rhel7-111-rac1 date

[grid@rhel7-111-rac1 ~]$ ssh rhel7-112-rac2 date

[grid@rhel7-112-rac2 ~]$ ssh rhel7-111-rac1 date

[grid@rhel7-112-rac2 ~]$ ssh rhel7-112-rac2 date

Part 3: Install the Grid software

Unpack the Grid installation package

[grid@rhel7-112-rac1 ~]$ cd /u01/app/12.2.0.1/grid/

[grid@rhel7-112-rac1 grid]$ unzip linuxx64_12201_grid_home.zip

Run the pre-installation check for Grid

[grid@rhel7-112-rac1 grid]$ ./runcluvfy.sh stage -pre crsinst -n rhel7-111-rac1,rhel7-112-rac2 -verbose

yum install ksh libaio-devel -y

The cvuqdisk package is in the cv/rpm directory under the Grid installation files:

[root@ehs-rac-01 shm]# cd /u01/soft/cv/rpm/

[root@ehs-rac-01 rpm]# rpm -ivh /u01/soft/cv/rpm/cvuqdisk-1.0.10-1.rpm

Install Grid

[grid@node111 soft]$ export DISPLAY=172.16.1.1:0.0

[grid@rhel7-112-rac1 grid]$ ./gridSetup.sh

The graphical configuration steps are omitted.

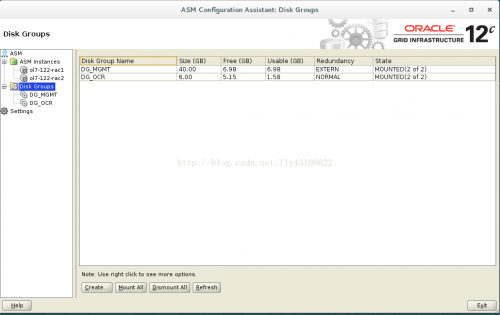

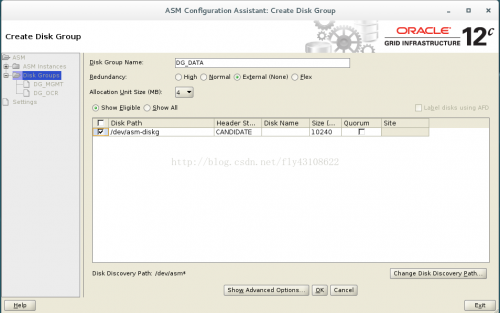

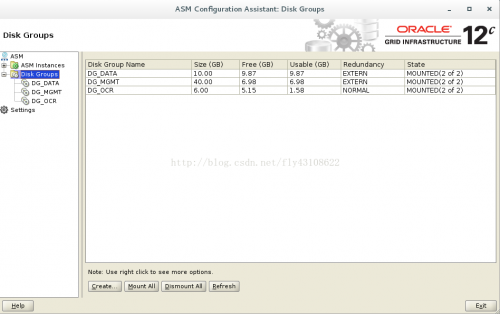

Configure ASM

[grid@rhel7-112-rac1 grid]$ asmca

Create the DATA disk group:

Install the Oracle software

Unpack the Oracle installation package

[grid@rhel7-111-rac1 soft]$ unzip linuxx64_12201_database.zip

[grid@rhel7-111-rac1 soft]$ export DISPLAY=172.16.1.1:0.0

[grid@rhel7-111-rac1 soft]$ ./runInstaller

The graphical steps are omitted.

Create the database

[oracle@rhel-111-rac1 ~]$ unzip linuxx64_12201_database.zip

[oracle@rhel-111-rac1 ~]$ dbca

The graphical steps are omitted.

Check the cluster status

[grid@ol7-122-rac1 ~]$ srvctl config database -d orcl

Oracle12C-12.2.0.1-RAC-RHEL7.0-openfiler-VM installation and configuration
